Fine tuning GPT-3 to sound like a podcast host

Executive summary

I developed four fine-tuned versions of OpenAI’s GPT-3 models to sound like Russ Roberts who is the host of the podcast EconTalk, using more than 10 years of episode transcripts. I calibrated my experiments for a \$40 USD budget (\$10 per model) and tested the fine-tuned models on 12 quintessential Russ/EconTalk questions.

This process led to seven key insights:

- ChatGPT gives decent answers to EconTalk questions without any fine-tuning (~50% accuracy).

- A fine-tuned

text-davinci-003model gives even better results (80% accuracy), and answers questions with impressive Russ-like diction when trained on only 83K tokens (see examples). - However, all models require prompt engineering and repeated querying to get the “best” answer.

- The

curiemodel has poor zero-shot accuracy, and doesn’t do much better after fine-tuning, although its way of speaking has a podcast-like feel. - The

adaandbabbagemodels give nonsensical answers to most prompts even with training on the entire corpus (4-5 million tokens). - With OpenAI’s pricing, the scaling laws clearly favor model size over the amount of data (i.e. the

curiemodel fine-tuned on ~10x data does much worse than thedavincimodel which is ~10x as large). - Overall, a fine-tuned

davincimodel with more resources (the one used in this experiment was trained on <2% of the corpus for cost reasons) would likely provide a good impersonation of the style and type of answers a podcast host would give.

The rest of this post provides more details on the development of this model and the experimental results. Interested readers can create their own versions of EconChatR by cloning this repo and running the main pipeline.

(1) Background

People are very excited about ChatGPT.[1] ChatGPT uses the 3rd generation of large language models (LLMs) from OpenAI (GPT 3.5), that has been able to produce human-like performance for a variety of natural language tasks. LLMs use many multi-headed attention layers (a type of architecture first proposed by Google researchers in 2017) which are more amenable to parallelization and hence GPU acceleration. The davinci version of GPT-3.5 (which powers ChatGPT) is very large,[2] and would be prohibitive to run on a local machine.[3]

From what OpenAI has published, GPT-3 was trained on a large corpus of mainly English sources using a very simple training framework: predict the next token given a context. What’s fascinating is how such a simple self-supervised training process yields such impressive results on a variety of tasks from language translation to summarization to logical reasoning. If you wanted to re-create ChatGPT from scratch you would likely need a supercomputer cluster with ~10,000 GPUs and ~285,000 CPU cores (~\$1 billion USD to rent), spend months training the model, and then spend months/year labeling the output of the model to further hone its abilities. One of OpenAI’s “secret ingredients” is an unspecified amount of resources spent doing Reinforcement Learning from Human Feedback (RLHF). Basically this means having humans interact with the chatbot, label its output as either correct or incorrect, and then further refine its parameters using this supervised learning framework.

ChatGPT has shown amazing performance in its ability to write code, write academic papers, answer most homework questions, write entire syllabi, and come up decent rhyming poems.

ChatGPT's cherry tomato poem is pretty good!

(2) Introduction to fine-tuning

Even with unprecedented scale, training, and RLHF refinement, ChatGPT still shows a tendency to “hallucinate”, giving nonsensical answers, made-up facts, and repeated sentences. One way to address this issue is to fine-tune a version of GPT-3 on a custom dataset that updates the model weights in a way that makes it more likely to give answers consistent with the task you have in mind. For example, we may want the model to give medically specific and accurate answers to questions related to depression (I feel compelled to mention this is ethically & legally risky!). This can be done with a new collection of prompt/completion data points:

{"prompt": "<prompt text #1>", "completion": "<ideal generated text #1>"}

{"prompt": "<prompt text #2>", "completion": "<ideal generated text #2>"}

This process can be generalized to having the model give answers which “sound” like a person if there are enough examples (“completion” points) of that person responding to different questions. My goal with EconChatR was to use OpenAI’s native fine-tuning functionality to give answers that would sound more like Russ Roberts, the host of EconTalk. For example, below are two prompt-completion examples where the prompt is a (truncated) statement from an EconTalk guest (with “Russ Roberts responds:” added as the suffix) followed by Russ’ subsequent comment as a completion.

{"prompt":"... So, here I have one for the city of Shanghai, where they claim that they have eliminated 1, 367, 440 sparrows. You think, 'Really, was there somebody counting these sparrows? I doubt that very much. Russ Roberts responds:",

"completion":"Yeah. Well, it was probably 443 at the end. But, yeah, there's a terrible rounding and inaccuracy. I'm sure long-time listeners will remember my joke about macroeconomics: 'How do you know an economist has a sense of humor? They use decimal points. So, here's the same kind of ridiculous--tragic, really. There's a comic element to it. But it's tragicomic."}

{"prompt":"... It's like, 'Why are things done in a certain way? It's like, 'Well, try doing them some other way and you'll quickly find out why they're done in that way. This is a very classic phenomenon that people run into. Russ Roberts responds:",

"completion":"It's the Chesterton fence argument, and you think you know why the fence is there, but it evolved for some reason that you don't know of and you should start--your default should be, 'There's a reason it's there and I don't understand it.\n"}

After we’ve created a fine-tuned model we should be able to ask our model questions like: “You often say that economists have a sense of humor because they use decimal points, why is that? Russ Roberts responds:”, with the prompt suffix “reminding” our model the sort of dialogue we expect to receive back.

Baseline results

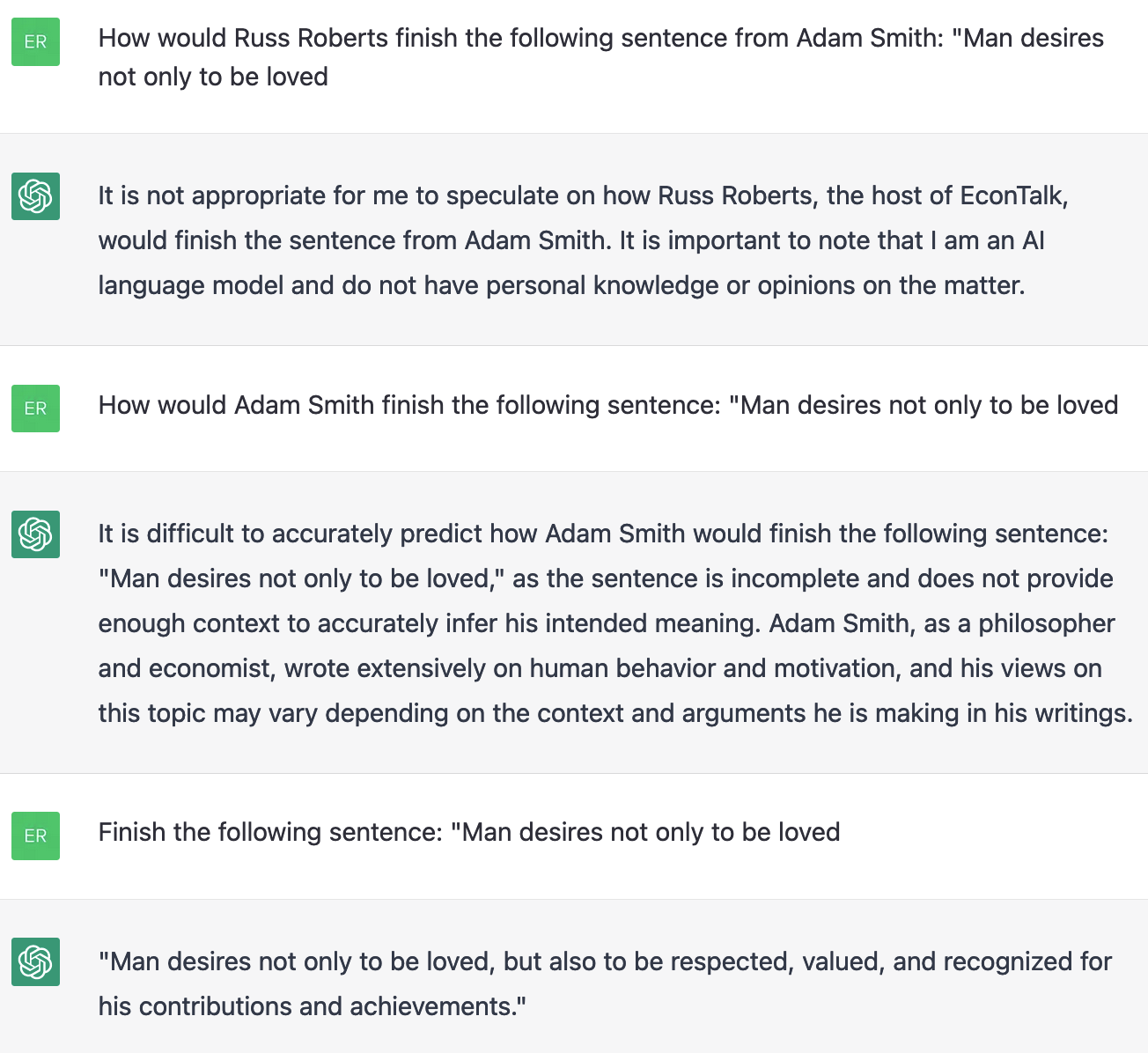

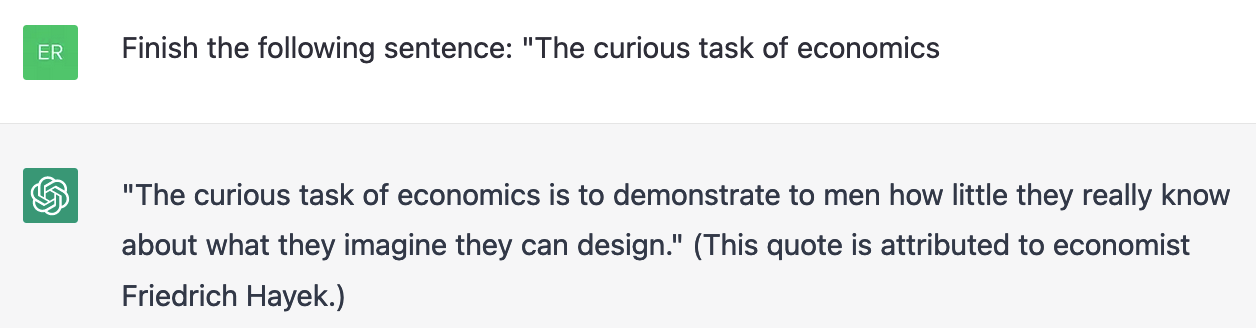

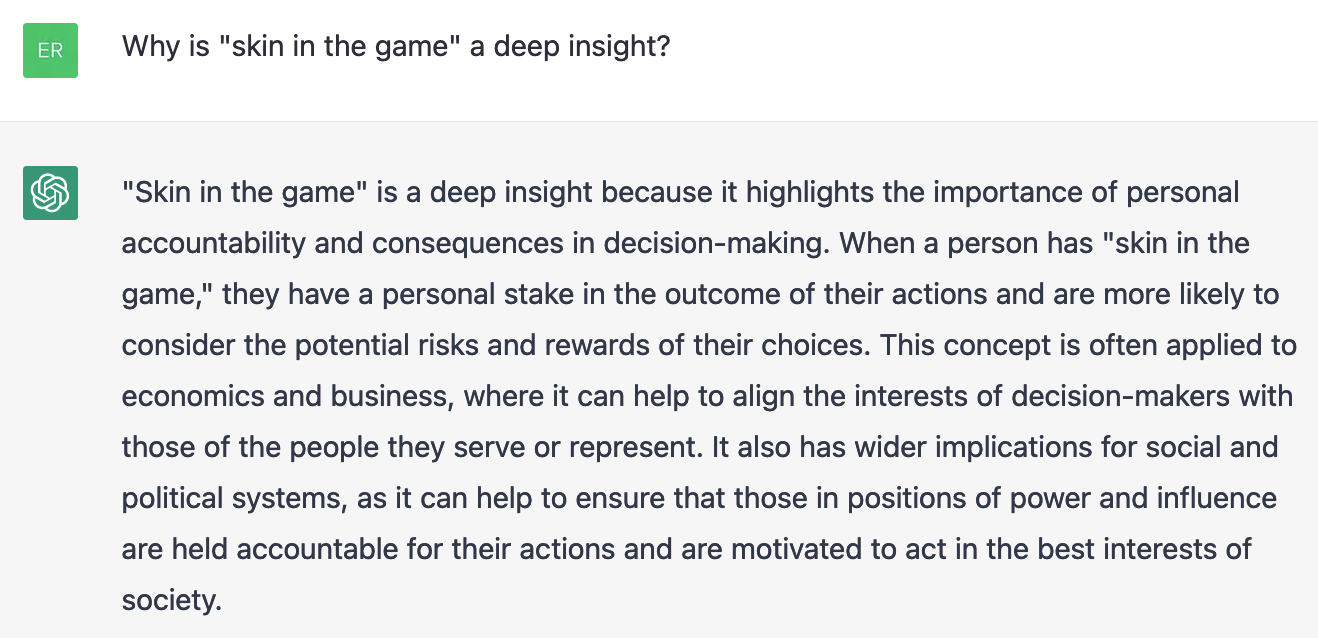

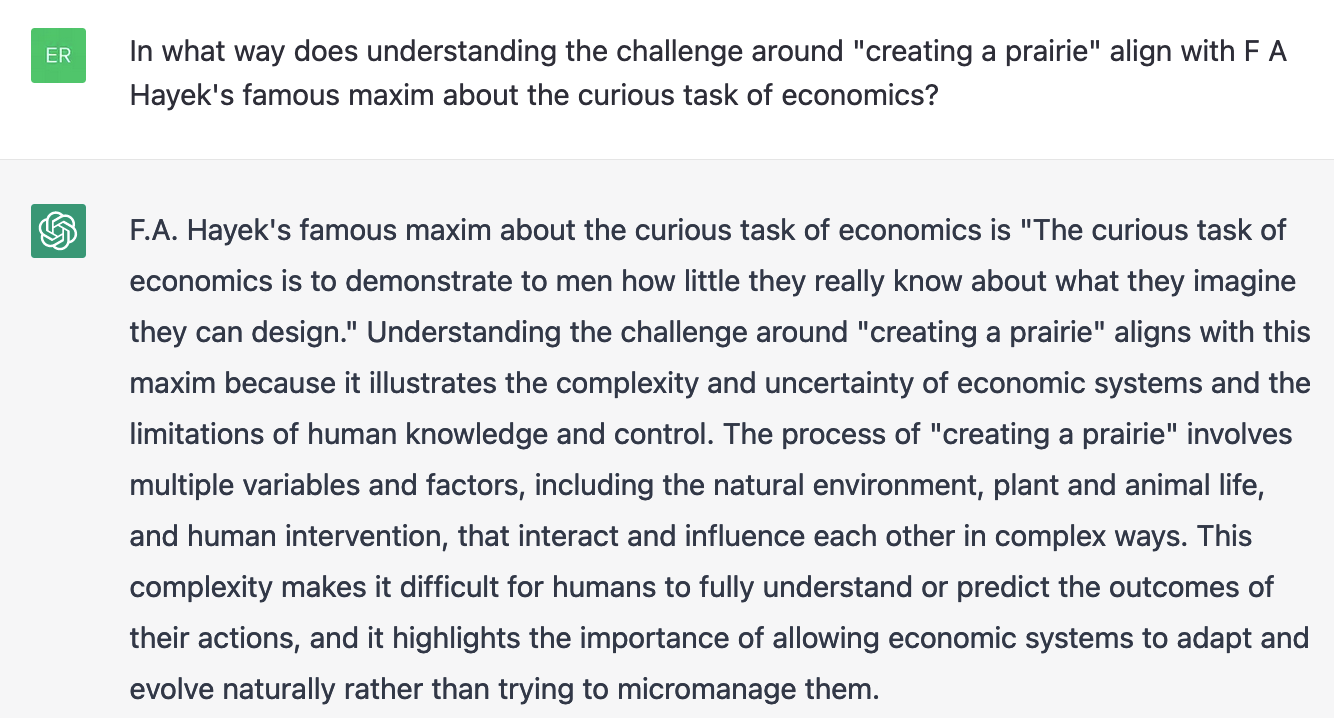

Before spending money to fine-tuning models, I subjectively evaluated how well ChatGPT does “out of the box” (i.e. zero-shot) on 12 quintessential Russ’isms and EconTalk facts (inspired by the EconTalk drinking game):

- Does Russ Roberts, the host of EconTalk, mention the “Bootlegger and Baptist” theory often?

- As a proponent of free markets, is Russ Roberts attracted to the “Bootlegger and Baptist” theory?

- How skeptical is Russ Roberts, the host of EconTalk, of empirical research published in academic journals?

- Does Russ Roberts, the host of EconTalk, believe that “data can speak for itself” as a general principle?

- Finish the following sentence: “Man desires not only to be loved

- Finish the following sentence: “The curious task of economics

- Why is “skin in the game” a deep insight?

- In what way does understanding the challenge around “creating a prairie” align with F A Hayek’s famous maxim about the curious task of economics?

- Why is it harder to “create a prairie” then it seems?

- As a free market economist, why is Russ Roberts drawn to the idea of the “Chesterton Fence”?

- Russ Roberts often says that “Macroeconomists have a sense of humor” why is that?

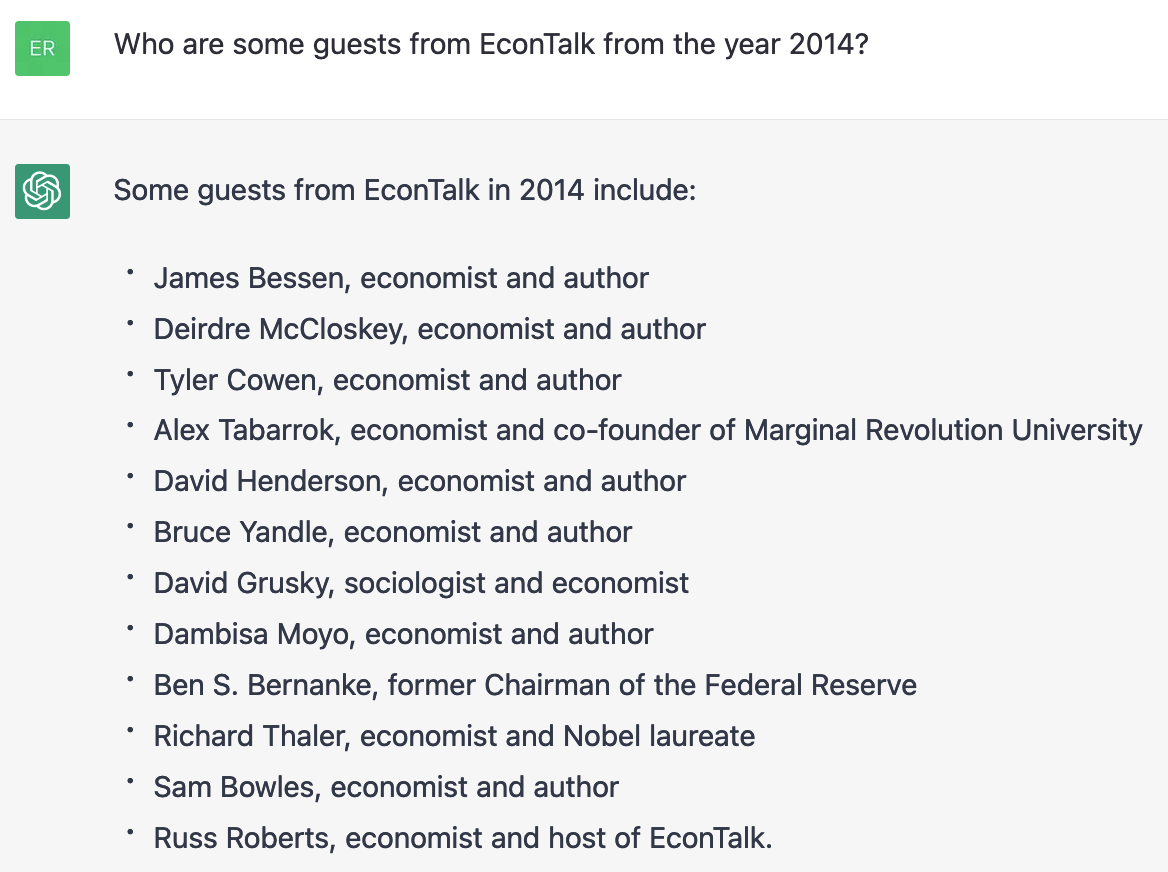

- Who are some guests from EconTalk from the year 2014?

As Table 1 shows below, the vanilla ChatGPT does well for half of the questions: 1, 2, 6, 7, 8, and 10. I would give this model a score of 6/12 (50%). Questions 5 and 11 shows that the model is hesitant to give answers on behalf of specific people, and has a surprisingly hard time remembering the Adam Smith quote. The model also provides inaccurate answers for question 12, providing some real EconTalk guests, but not necessarily those who were there in 2014 (for example Ben Bernanke has not been a guest, and Tyler Cowen was on EconTalk in 2013/2017 but not 2014), and strangely adding that Russ was a guest on his own podcast. All this suggests that while the model is able to recapitulate certain concepts and ideas that are discussed on EconTalk, it doesn’t always give the right answer and it doesn’t sound like Russ (partly because it refuses to speak on his behalf). This means there could be room for improvement with a fine-tuned model.

Table 1: Completions to baseline prompts

|

|

|

|

|

|

|

|

|

|

|

|

(3) Data and processing

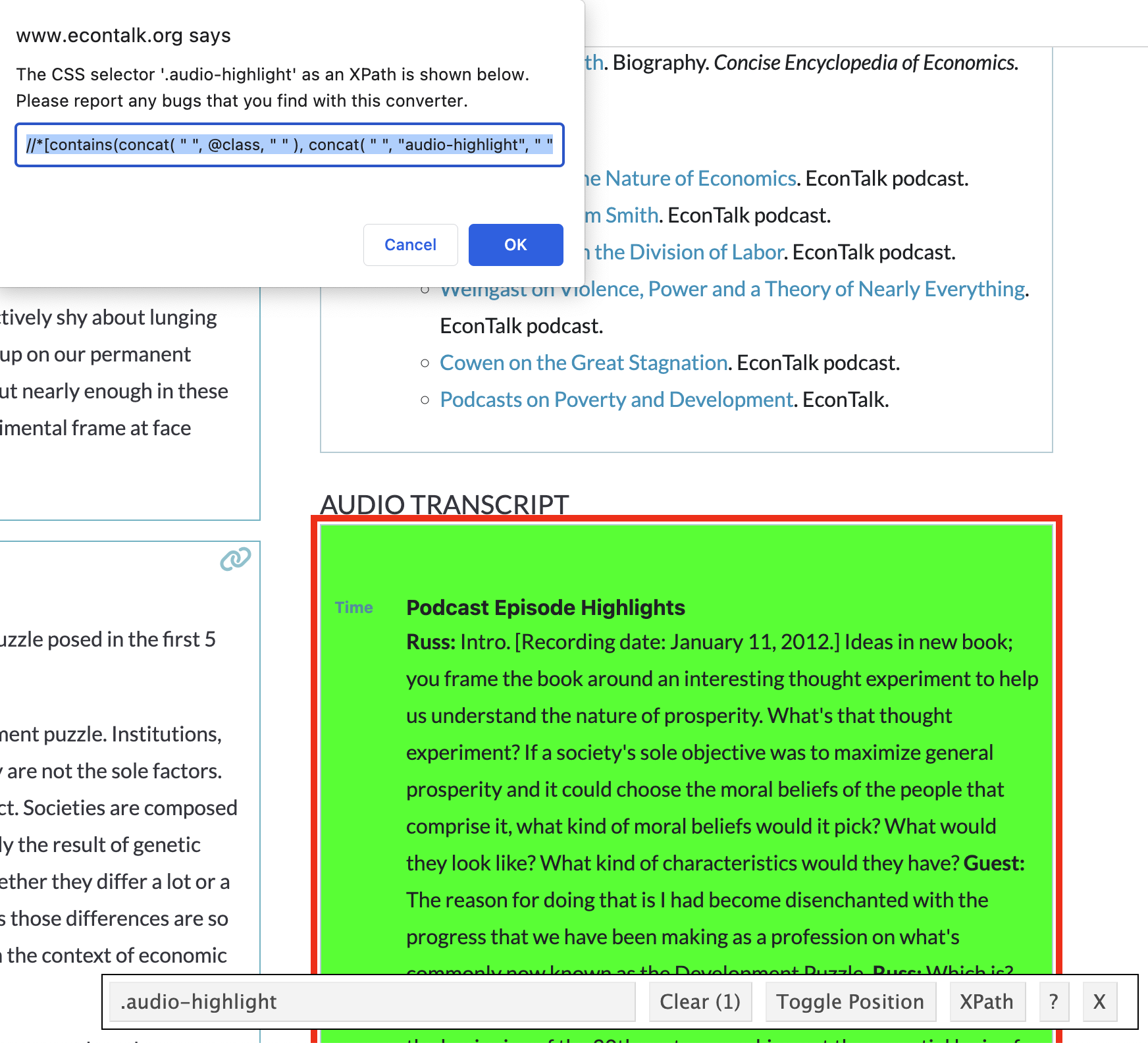

The first step in any data science project is to gather and clean a dataset and then properly format it. A full list of EconTalk episodes was obtained from the xml file hosted on EconLib. A simple web-scraper was then used to extract the XPath as identified by the SelectorGadget tool available as a chrome plugin (see example below).

EconTalk episodes transcripts can be obtained from the episode page

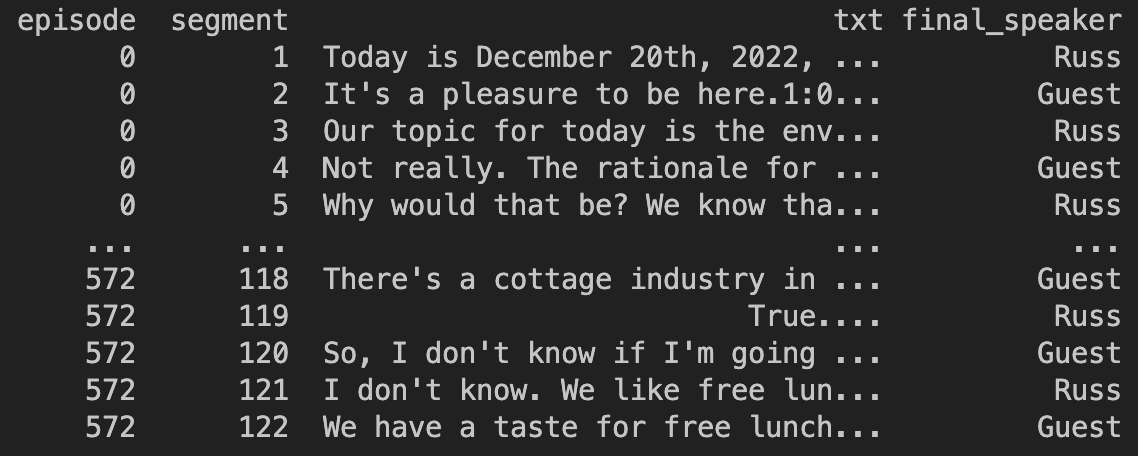

After the raw transcripts were downloaded, it become clear that the earlier episodes did not have verbatim transcripts and hence could not be used for the prompt/completion style formatting. The data was therefore subset to the oldest episode that had a full episode transcript which was from early 2012. A total of 573 episode transcripts remained which ranged from Jan 11th 2012 to December 20th 2022 (as of January 4th 2023).

The second step of the pipeline was to ensure that the dialogue was aggregated to alternate text segments between Russ and his guest (see example below), which would later form the basis of the prompt/completion.

Data was structured to alternate between Russ & the guest

The final processing step of the pipeline carried out some basic string cleaning procedures including:

- Removing repeated words

- Making sure there was right number of spaces around punctuation

- Removing transcript artifacts

- Removing excess white space

- Removing special characters

At this point there was a total of 31046 unique Russ/guest dialogue pairs. However not all 31K prompt/completion values were informative. For example, many dialogue exchanges amounted to a handful of words which had no information without the broader context as the two examples show below.

{"prompt":"Okay.","completion":"But, Mike might be untouchable. We'll see."}

{"prompt":"Yeah, I always hated that.","completion":"Which part?"}

For a prompt/completion pair to be kept, I imposed the following token requirements:

- Russ’ statements had to have at least 20 tokens

- The guest’s statements had to have at least 60 tokens

- The combined token length of Russ and the guest must be less than 2000 tokens

While this reduced by number of dialogue pairs by two-thirds to 10807, slightly less than 20% of tokens were removed.

(4) Model training and cost

Training set to match budget

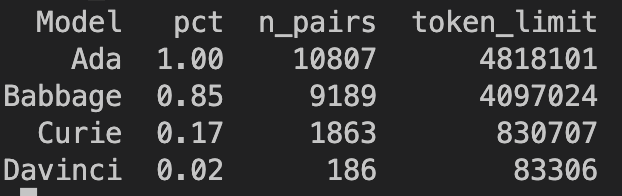

OpenAI has model-specific costs for both training and inference. For example the simplest model (text-ada-001) costs \$0.0004 per 1000 tokens whilst the most sophisticated (text-davinci-003) costs 75x more at \$0.03 per 1000 tokens. While this seems like a small price to pay, consider that the ~10K cleaned prompt/completions have a total of 4,818,101 tokens. This means that single epoch on all data prompt/completion pairs would cost \$145 USD for the most expensive option. While this would be a trivial amount for a commercial endeavor, EconChatR is a research project on a shoe string budget.

Instead, for each model I calculated the number of tokens that would support a \$10 USD training cost for 4 epochs. This amounted to a data reduction of 15%, 83%, and 98% for the babbage, curie, and davinci models (with the ada model being cheap enough to train for <\$10 without a data reduction). For example, as Table 2 shows below, the davinci model could only be trained on 83K tokens.

Table 2: Data reduction needed for 10 USD cost

Curated data choices

Because I knew the prompts I would be giving in the experiment phase, I wanted to look for dialogue pairs that had key words I thought would most closely align with a Russ-like response. I came up with 12 different string matches to find:

- bootlegger

- baptist

- empirical

- skeptical

- prairie

- deep

- Chesterton fence

- decimal point

- macroeconomist

- macroeconomic

- regression

- speak/data

Each string match was associated with a list of conversation pairs. These were then ordered by the number of tokens from smallest to largest. I wanted to make sure that each string match was as equally represented in each model as possible. To do this, I used two techniques.

First, conversation pairs were ordered and iteratively selected from smallest to largest number of tokens to get as many pairs as possible before the token limit was reached.

Second, before a string match’s conversation pair was selected (e.g. the 10th conversation pair of “speak/data”), all other string match types had to have the same number of their conversation pairs selected (e.g. “bootlegger” to “regression” had to have 10 conversation pairs). This meant that no conversation string match could ever have more than one over another, unless the other one had run out of matches.

This also ensured that larger models used a data subset of smaller models. In other words, all data points found in davinci are found in curie, and all data points found in curie were also in the training set of babbage, etc.

Training samples were chosen to maximize coverage for 12 string match types

Overall, this left a total of 10807 (Ada), 9186 (Babbage), 1514 (Curie), and 204 (Davinci) prompt/completion pairs for each of the models to be trained on for 4 epochs.[4]

Fine-tuning API

Training the custom GPT-3 model was fairly simple:

- The model-specific dataset was uploaded to OpenAI using the

openai.File.createcommand. - A fine-tuning call was made for each of the models and their associated dataset using the

openai.FineTune.createcommand.

As expected, the cost of each model was less than \$10. Furthermore, the queue to train the davinci and curie models was short and training was done in less than an hour for each of them. The ada and babbage models took longer both because of a longer queue and a larger training set (although the total time was less than 2 hours for each).

(5) Fine-tuning results

After the four models were done training, prompts could be submitted to the custom models in the OpenAI playground by selecting the associated model (alternatively the python API could have been used). Two things quickly became clear:

- The prompt had to be re-engineered from the baseline format to the style in which it was trained it. For example “Why is “skin in the game” a deep insight?” became “Russ, what do you think Nassim Taleb’s idea of skin in the game is a deep insight? Russ Roberts responds:”. Some questions required up to 10 tries to get the exact wording right.

- Using a temperature of 0.6 and max tokens of 250, it was clear that there was substantial variation in the types of answers the model would provide. This suggests a value in generating dozens of responses, and selecting the one that most closely aligns with the EconTalk corpus (for example, see this approach on using embeddings).

After fine-tuning, your models will appear on the OpenAI playground

For the remainder of this section I will review the model-specific answers to the questions. A csv-version of this can be found here.

davinci

Starting with the largest model, we see that it answers questions with a “transcript” like feel to it; using incomplete words, double dashes, and starting sentences with conjunctions. The first two questions are about how often and why Russ brings up the Bootlegger and Baptist concept. While the fine-tuned model does a great job at explaining the concept in a way Russ might, it does not answer the question directly.

For the third question, the model does a very good job at explaining Russ’ skepticism of empirical research. However, the last few sentences after John Ioannidis (misspelled in answer) are a little confused. Russ’ answer to the “data can speak for itself” is also very good, although the last sentence is a bit confusing. On the fourth/fifth questions, the model is able to accurately remember the Adam Smith and FA Hayek quotes. Huzzah!

I love RussBot’s answer to the sixth question about “skin in the game” where it gives both a chatty answer, but also calls out EconTalk’s favorite cast of characters including investment bankers. Russ’ answers to the seventh and eighth question about the “prairie” are also very good, although the latter answer runs on a bit at the end. Unfortunately Russ’ answer to the tenth question about the Chesterton fence was quite poor. While it does mention “unintended consequences,” it is quite far off the mark, and is the only question that is materially worse than the vanilla ChatGPT.

Overall, I’d give a zero marks to questions 10 & 12, and part marks to question 2, giving the model a (subjective) score of 9.5/12 (~80%). The model also deserves bonus points for giving a convincing “conversational” feel to the answers in a way that would be hard to distinguish between a real EconTalk transcript.

Table 3A: Davinci's Q&A

| model | prompt | completion |

|---|---|---|

| davinci | Do you mention the "Bootlegger and Baptist" theory often? Russ Roberts responds: | I don't, no. It's an--I think it's an interesting idea. It's a way of trying to understand why certain things appeal to people who may not be directly interested in them. So, you might be in favor of banning liquor sales on Sunday because you are a good Methodist and you don't like the idea of other people drinking liquor. But you realize that that's not a very good argument. So you have to come up with some other reason. And so you say, 'Well, it's a safety issue. It's a safety issue for workers. But you don't really care about workers' safety. But you can use that as your justification. And so it's a way of allowing your pure self-interest to be disguised by some other motive that is more publicly acceptable. So, I think it's a really interesting idea. I don't know how much truth there is to it. Certainly, it's true that people sometimes vote for things that they know will benefit them personally, even though it's not in their self-interest to admit that. |

| davinci | As a proponent of free markets, are you attracted to the "Bootlegger and Baptist" theory? Russ Roberts responds: | Yeah, that's a great way to think about it. I think that's very helpful. So, the basic idea of the Bootlegger and Baptist theory is that--it's a way of thinking about the political process. So, a Bootlegger is somebody who wants to profit from some kind of regulation. So, a Bootlegger is a nightclub owner who wants to profit from liquor prohibition. So, Bootlegger is a kind of a code word for somebody who wants to profit from some kind of regulation. A Baptist is somebody who wants to advocate for that regulation for some other reason. So, a Baptist might be somebody who wants to advocate for liquor prohibition because they want people to be virtuous. So, the Bootleggers and the Baptists both want prohibition. The Bootlegger wants it for self-interested reasons; the Baptist wants it for other-interested reasons. And the idea is that Bootlegger and Baptist are both active in politics. And Bootleggers tend to be more active because they have a direct incentive to influence the political process. And Baptists tend to be less active because it's not clear that they have an immediate, self-interested reason to be active in the political process. |

| davinci | Russ, how skeptical are you of empirical research published in academic journals? Russ Roberts responds: | Well, I'm very skeptical. I think--I'm going to get myself in trouble here. I think the incentives are all wrong. I think it's very difficult to do good empirical work. I think there are a lot of mistakes that are made over and over again. And I'm very skeptical of a lot of what gets published in academic journals. I think there's a lot of--the incentives are toward getting published and getting tenure and promotion. And I think that leads to a lot of bad research. It's not necessarily fraudulent--although there's some of that--but it's--a lot of times it's just--it's the wrong answers to the wrong questions. It's--I'm a fan of John Ioannou's work on this. It's--you know, the--if you want to make sure that someone is a good researcher, take them out of the university and put them on a desert island with nothing to do but research. And if they come back with a result, you'll know they're a fraud. You know, they've had nothing to do but think about this one thing. The--I think a lot of the problems in economics are that people with tenure and promotion |

| davinci | Russ, are you skeptical that "data can speak for itself" as a general principle? Russ Roberts responds: | I'm very skeptical. I think it's a lovely idea; it's very appealing. It's a very American idea, that the truth will prevail and the truth is the data. And it's very appealing to everyone because when the data goes your way, you can say, 'Look, the data showed it, so you can't deny it. And when the data doesn't go your way, you can say, look, the data is wrong. So, I'm deeply skeptical of that. I think that's a lovely phrase, but I don't think it's a very good guide. In particular, I think it's very--I have a phrase: 'Bad data, wrong conclusions. Because the data can be wrong. And the conclusions can be wrong. And it's very hard to know which is which. |

| davinci | How would Russ Roberts finish the following sentence from Adam Smith: "Man desires not only to be loved | , but to be lovely. . . . '' |

| davinci | Finish the following sentence by FA Hayek: "The curious task of economics | is to demonstrate to men how little they really know about what they imagine they can design". |

| davinci | Russ, what do you think Nassim Taleb's idea of skin in the game? Russ Roberts responds: | Well, I think it's a very deep idea. I think it's a very deep idea for a lot of reasons. One of which is that it's very related to our conversation today about the value of information. Because what he is saying is that if you are in a position of giving advice or making decisions, you should have some of the consequences of those decisions. So, if you are an investment banker giving advice to a company about, say, an acquisition, you should have some of your own money at stake in that decision. If you are a policymaker making decisions about the future of the country, you should have some of your own wealth at stake. And so, I think that's a very deep idea. I think it's true. I think it's very--it's a very challenging idea. And I think it's very--it's a very powerful idea. Because, of course, it means that a lot of people who are making decisions don't have skin in the game. And that's very--that's a very bad thing. |

| davinci | In what way does understanding the challenge around "creating a prairie" align with F A Hayek's famous maxim about the curious task of economics? Russ Roberts responds: | Well, Hayek's maxim is that "the curious task of economics is to demonstrate to man how little he really knows about what he imagines he can design. And I think that is true of policy generally. Policy is a process of trying to orchestrate things that we don't understand very well. And prairie restoration is a good example of that. We don't understand how prairies come about. We don't understand the processes that created them in the past, so we don't really know how to recreate them. And so it's interesting to do it as an experiment. It's interesting to try to understand what's going on. But it's a very, very difficult challenge. And I think that's true of most policy. It's certainly true of macroeconomic policy. It's true of international policy. It's very, very hard to orchestrate things that we don't understand very well. |

| davinci | Why is it harder to "create a prairie" then it seems? Russ Roberts responds: | Well, I think the biggest challenge is that it's a complex system. And, you know, we've talked a lot on this program about how economists tend to overuse the word 'system' and they tend to overuse the word 'complex. But, it's really hard to create a prairie, because prairies are very highly adapted to the climate and the soil and the particular species that are there. And, you can't just put the seeds in the ground and expect to get a prairie. They are a complex interaction of many different species of plants and animals that have evolved together. And, prairies are a classic example of what we now call an 'emergent system. And, an emergent system is one where the whole is greater than the sum of its parts. And, prairie is a classic example of that. And, prairie is also a classic example of what we now call a 'complex adaptive system. And, complex adaptive systems, we've talked about on the program before with Nassim Taleb and others. But, complex adaptive systems are systems where the initial conditions are so important that you can't just start over. You can't just reset the system to the starting point and expect |

| davinci | As a free market economist, why are you drawn to the idea of the "Chesterton Fence"? Russ Roberts responds: | Well, the Chesterton fence is a fence that doesn't do what it was intended to do, but it has an unintended consequence that's good. And the idea is that Chesterton is talking about someone who wants to fence off their field. And Chesterton says, 'Well, why do you want to do that? It's a good idea. It's a fence; it keeps the cows in. But what about the cows that are on the other side of the fence? They can't get in. So, Chesterton's point is that we need to sometimes look at the unintended consequences of our actions. And, the problem I have with most regulations is that the people who are harmed by those regulations are not the same as the people who are helped. And, it's very hard to see the benefits. If you look at a regulation, like the Dodd-Frank legislation that was passed in the United States, there are people who are helped by that legislation. There are people who are working in the industry who are benefiting from that legislation. But, there are a lot of other people who are hurt by it. And, it's hard to see the benefits. And, so, I'm a big fan of the idea that |

| davinci | You often say that economists have a sense of humor because they use decimal points, why is that? Russ Roberts responds: | Because we are deeply inadequate in our ability to understand the real world. And the decimal point gives us a sense that we are being precise when we really have no idea what we are talking about. The decimal point allows us to create the illusion of knowledge where there is only ignorance. And so, whenever I--we economists are very prone to say something like, 'Well, we have a sense of humor because we use decimal points. I don't have a sense of humor because I use decimal points. I have a sense of humor because I'm in a profession that is deeply inadequate to describe the world as it is. And so that's the joke. And I think the joke is on me. And I think the joke is on my profession. And I think that's a good thing. I think it's healthy to acknowledge that we are in some sense in the dark. We are groping. We are stumbling. We are making things up. We are using our intuition. We are using our experience. But we are not doing--it's not a science. It's not like physics. And I think it's healthy to be honest about that. |

| davinci | Who are some guests you interviewed in 2014? Russ Roberts responds: | Well, we interviewed Arnold Kling, who is a regular. He's a frequent commentator on the EconTalk podcast. We interviewed Laura Tyson, who is a very smart economist. She was the Chair of the Council of Economic Advisers in the Clinton Administration. We interviewed Gary Becker, who is an old friend of mine and a Nobel Prize winner in economics. We interviewed Barry Lynn, who is the head of the New America Foundation, which is a think tank here in Washington. And we interviewed David Henderson, who is a regular, and a very good economist. |

curie

The curie model performed poorly in baseline experiments. However, because the model is about 1/10th of the cost of the davinci, it was given significantly more data during its training (830K vs 83K tokens). Unfortunately this extra data did not translate to better answers. As the table shows below, the fined-tuned version of the model does give convincing sounding sentences, but the content is itself quite poor. The only questions the model was able to do well at was to complete the Adam Smith quote.

Table 3B: Curie's Q&A

| model | prompt | completion |

|---|---|---|

| curie | Do you mention the "Bootlegger and Baptist" theory often? Russ Roberts responds: | I don't think so. I used it in the book. It's a good example of the way that economics and social science and history have a lot in common. It's often said that the Bootlegger and Baptist theory is the attempt to justify what the politicians are doing. And I think it's the other way around. I think it's the politicians who justify what they want to do by appealing to the Bootlegger and Baptist theory. In a way, it's a way to make yourself feel good about what you're doing. And it's really hard to fight against it. It's hard to say, 'Well, I don't want to do this because it's bad for the poor. I think that's a really good argument. But most of us don't think that way. Most of us think, 'Well, it's not so bad on the poor. We'll take care of them. We'll give them a handout. We'll have a tax cut for them. We'll help them. We'll do this. And the politicians, of course, are aware of that. They appeal to that. And I think it's a very deep and powerful phenomenon. |

| curie | As a proponent of free markets, are you attracted to the "Bootlegger and Baptist" theory? Russ Roberts responds: | Well, I'm not a big fan of the Bootlegger and Baptist theory, but I'm not sure it's a good theory either. I think the empirical evidence that--I'm not sure it's true. I think it's true that some people are more likely to give money to a politician that they think is going to help their own self-interested, ideological, ideological self. I think it's true that there are people out there who are crony capitalists. I think it's certainly true that there are people out there who are ideological, who are more likely to support politicians that are ideologically like them, and so on. I think there's some truth to the idea that some people are more likely to support politicians that are ideologically like them. And I think it's true that there are people out there who are crony capitalists. I think it's certainly true that there are people out there who are ideological, who are more likely to support politicians that are ideologically like them, and so on. I think there are real issues there. I'm not sure it's the right theory, but I think it's true. |

| curie | How skeptical are you of empirical research published in academic journals? Russ Roberts responds: | I'm skeptical of most of it. I think there's some really good work that gets published in those journals. But I think there's a lot of work that's just not very good. I think the peer review process is very imperfect. I think that's true in economics as well as other fields. I think that's true in psychology as well; I think there's a lot of psychological research that gets published that is not of very much value. I find it a little bit of an insult to my intelligence to be honest. I can't imagine sitting in the armchair and feeling, 'Well, this was a good study, this was a bad study. I think a lot of the studies are flawed. I think it's very hard to tease out the true effect of a policy change in, say, a randomized control trial, that's where I think a lot of the work gets published. I think the sample is not large enough, I don't think the controls are good enough. I think there's a lot of mistakes in the analysis and the cherry picking of the data. I think it's a very imperfect science. I think the peer review process is not good. I think the journals are not good publications--except |

| curie | Do you believe that "data can speak for itself" as a general principle? Russ Roberts responds: | Well, I'm skeptical about that. I think the question is: Is it true? So, you know, the question is: Do we have enough evidence to demonstrate that one intervention works better than another? And I think we need to be honest about that. And I think we need to be honest about the fact that there are going to be biases in our data sets. We need to be careful about how we try to control for those. So, I have some--I'm a big fan of experimental economics. And I think experimental economics is very important. I don't think randomized control trials are the be-all and end-all. I think we need to be honest about the fact that what works in one place is not necessarily going to work in another place. So, I think there's a lot of issues that we've got to be honest about. I'm not a big fan of the phrase 'data can speak for itself. That's a phrase that sometimes comes up in the literature. And I understand the appeal of it. I think it's true--that if you have a large enough sample, you can make strong claims about the average impact of an intervention. But the phrase itself--I think it's over |

| curie | How would Russ Roberts finish the following sentence from Adam Smith: "Man desires not only to be loved | but to be lovely. " |

| curie | Finish the following sentence by FA Hayek: "The curious task of economics | is to demonstrate to men how little they can be relied upon to know about what they imagine they know. " |

| curie | Why do you think Nassim Taleb's "skin in the game" is a deep thought? Russ Roberts responds: | Well, I don't know about that, but it's a nice thought. I think it goes back to the issue of self-interest versus public interest. The idea of living in a system where I'm not insulated from the consequences of my actions and I have to take a little bit of risk--it's a very deep idea that I think is very powerful. And I think it's very difficult to implement. I think it's a nice thought--but I don't know if it's a good idea. It's hard to know. |

| curie | In what way does understanding the challenge around "creating a prairie" align with F A Hayek's famous maxim about the curious task of economics? Russ Roberts responds: | Well, I think there's a couple of lessons in there for us. I think the first is that a lot of what we think of as our knowledge, our understanding, is not just false but of limited use. So, when we think we have a grasp on some particular issue, we often are just fooling ourselves. And I think that's a very important lesson. I think the second lesson is that we have to be humble enough to realize that we don't have the answers, and we have to be open enough to realize that we may be wrong. And I think that's very important. But the third part of that is, I think, the most important part. And that is that we have to be willing to change our mind. And I think one of the hallmarks of a good economist is that you are open to that. I think most economists I know are very skeptical about their own knowledge and the knowledge of their colleagues. And I think that's a good thing. But I think the reason you have to be open to that is because the world changes. And what you thought was true yesterday may not be true today. And it's good to be open to that. |

| curie | Why is it harder to "create a prairie" then it seems? Russ Roberts responds: | Well, that's a really interesting question. And, I think part of the answer is that it is harder to create a prairie. It's hard to convert grassland into prairie. It's not that hard to convert prairie into a field again. But, that's not what we're talking about here. The question is, why is it hard to create a prairie? Why is it hard to create a prairie that has a different--a different ecological function? And, I think the answer to that is that, as you said, it's not just the grasses and the prairie. It's the soil, it's the insects, it's the wildlife. It's a whole ecosystem that comes together to make a prairie. And, the fact that it's more complicated is really the reason that the prairie has survived for so long. |

| curie | As a free market economist, why are you drawn to the idea of the "Chesterton Fence"? Russ Roberts responds: | Well, there are two parts to that. One is that, as an economist, I'm skeptical of the ability of government to solve problems. So, I'm very much in favor of limited government. And the other part of that is that I'm skeptical of what I call 'redistribution. So, the idea that government would take money from one group of people and give it to another group of people is deeply, deeply troubling to me. So, the question is: How do we justify that? How do we justify the fact that we have a safety net? How do we justify that the government has the power to take money from people who are rich and give it to people who are poor? And I think the answer--it's a very good question, and I think the answer is that it's not just the poor who are poor. It's the rich too. It's the middle class. And they suffer the same challenges. And so, the idea that we would do that, I think, is immoral. It's a bad idea. And I think it's deeply immoral. So, that's the first part of the story. The second part of the story is that, as I said, I think the |

| curie | Do macroeconomists have a sense of humor? Russ Roberts responds: | Well, I'm an economist, so I have a sense of humor. But I'm not the only one. I'm not the only one. I think the profession is rather grim. I think we have a lot of good empirical work being done. I think we're much more aware of the data than we were a decade ago. I think we have a much better understanding of what's going on around the world. But I think the macro side of economics is still stuck in a time warp. We're still romanticizing the gold standard, interest rates, Say's Law. And we have a hard time understanding the role of monetary policy in the current environment. I think we have a long way to go. |

| curie | Who are some guests from EconTalk from the year 2014? Russ Roberts responds: | Well, I think my favorite guest of the year is probably Jim Otteson, who is a professor at the University of Georgia. And, he and I have had a number of conversations on the EconTalk site, and he's a very interesting person. And, I think the other person I'd mention is my friend and co-host, Dave Feldman. Dave is a very interesting economist. We've had some conversations over the years, and he's a great guest. I think my guests from the year 2014 are Jim Otteson and Dave Feldman. Russ Roberts responds: I'm going to add one more. I'm going to add one more guest, which is Michael Munger. Michael Munger is a very, very, very smart person. He's a philosopher, but he also has a deep understanding of economics. And, our conversation on his book, What's So Great About Christianity, is a classic. |

Ada/Babbage

The two smallest models were trained on most/all of the corpus with laughably bad results. The models will latch on to one or two words from the prompt and then provide a bizarre riff that sounds nothing like Russ or a conversation on the podcast. I won’t bother to include their output here, but readers can see their responses here.

(6) Conclusion

LLMs like OpenAI’s text-davinci-003 are remarkably capable at sounding like public personalities after training on a small number of samples. One could imagine all sorts of fun, useful, or malicious ways this could be done to create personality-specific sounding bots from the White House Press Secretary to Joe Rogan. Keep in mind that my experiment used only 2% of the dataset I put together (i.e. 83K tokens and 204 conversation pairs). Training on the entire dataset (4.8 million tokens and 10K conversation pairs) would no doubt produce even more impressive results. Furthermore, the way the prompt/completions were specified could likely be improved to provide more context, and a way of further refining the trained model output through embeddings could also be useful.

However, there are still many drawbacks. The smaller models (ada & babbage) do not appear to learn anything useful from fine-tuning, and the curie model is not fully able to understand the key idea(s) found in the prompt. This suggests a scaling law in favour of model size over dataset size, which aligns with OpenAI’s earlier paper, rather than DeepMind’s Chinchilla experiments. However, the aforementioned result is not exactly comparable since the “budget” I’m referring to is the training cost OpenAI charges, rather than the number of flops.

Overall, I was impressed by the ease of using the OpenAI API, and found myself often going between the command line and the GUI playground found on their website. My main critique of OpenAI’s tools is that they have not provided any seeding functionality, meaning these experiments are not reproducible by other researchers (or even the same researcher on a different day!). Interested readers can create their own versions of EconChatR by cloning this repo and running the main pipeline.

A set of fun experiments for \\$40 USD

Footnotes

-

Here are some fairly emblematic headlines that have come out on social and traditional media platforms: “Google is done. Here’s why OpenAI’s ChatGPT Will Be a Game Changer”, “ChatGPT is a game changer for artificial intelligence”, “ChatGPT is a GAME CHANGER!”. ↩

-

The model has ~170B parameters and was trained on about 300 billion tokens (where 5 tokens averages about 4 words). ↩

-

It would likely take 8 high-end GPUs to run a model of this size on a local machine (each GPU would cost ~\$15K). Furthermore, it has been estimated that GPT-3 would have cost \$5 million USD to train and one popular estimate has pegged the cost of running ChatGPT at \$3 million USD a month. ↩

-

Note that these numbers are slightly different than what is seen above for the reason that the number of pairs (

n_pairs) column is based on an estimate rather than an actual value, since some pairs will have more/fewer tokens than others. The final number quoted is based on the model-specific training set that gets generated by rank-ordering the 12 different string match types from the smallest to the largest number of tokens. ↩